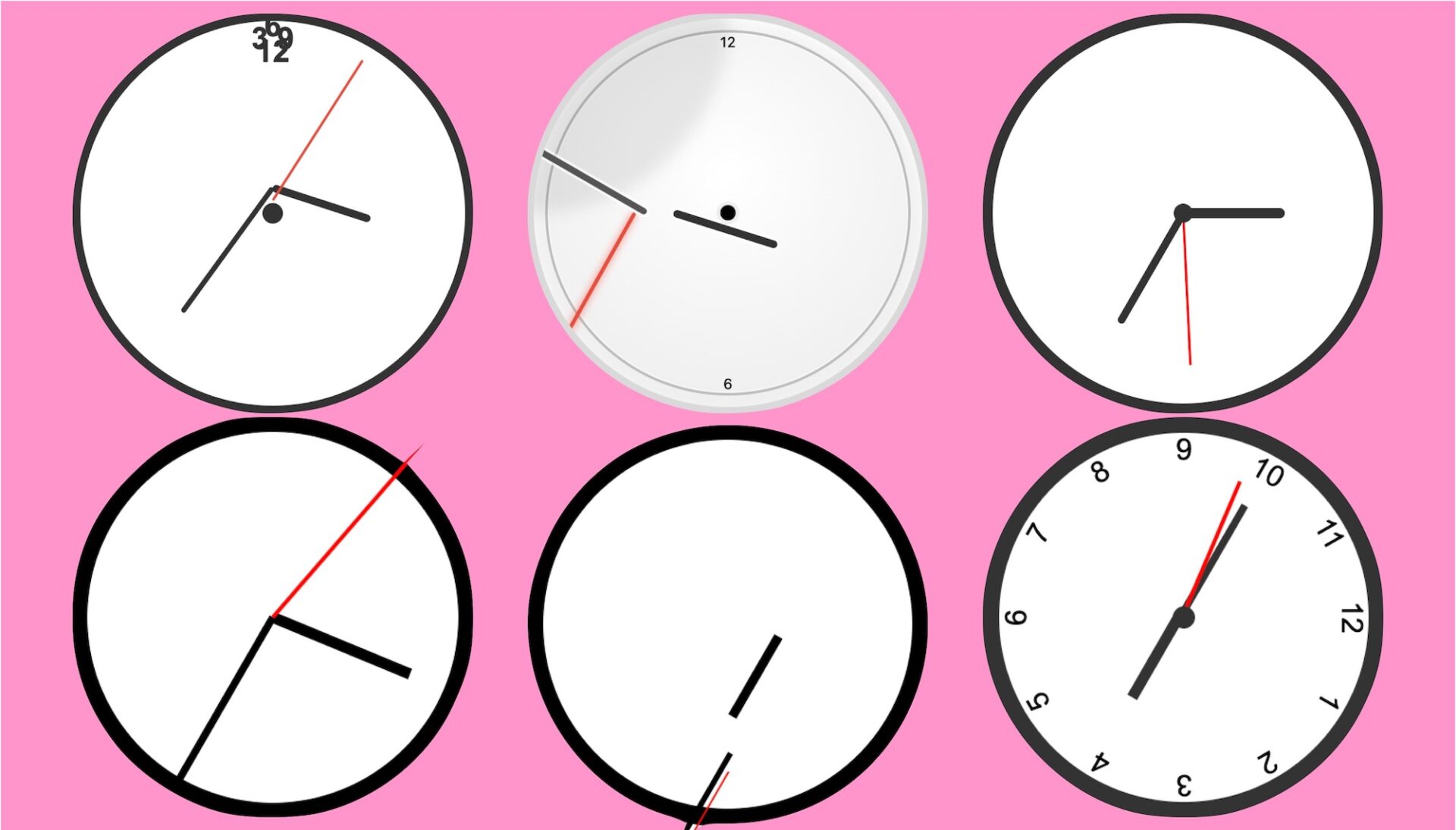

I can’t stop thinking about the website AI World Clocks. The premise is simple: all the major AI models on the market are asked to code a clock, and you get to see the results. The catch: They’re all beautiful disasters.

The numbers seem to be consistently in the wrong place, and sometimes are outside the clock itself. The hands may or may not be in the correct position, and sometimes are floating off in space outside of the clock. Even the clocks that are pretty good look…off, somehow.

“Telling time is a very human thing, very easy thing for us to do, and something you learn at a very young age,” Brian Moore, the artist behind the site, told me in an interview. “It’s kind of fun and funny to turn the tables—to see something a human could do very easily and a computer cannot.”

I’ve kept this site open throughout the process of writing this article and can confirm: It’s very funny. But why is the AI so bad at this?

Well, one thing to keep in mind is that the site limits each model to around 2,000 tokens to generate its clock and uses the same prompt for all of them. You could, given unlimited computing power and a very specific prompt, get a better clock from an AI system. But the question remains: Why is this so hard for AI systems? The reasons point to the way AI systems work.

AI is bad at telling time

AI isn’t just bad at making clocks; it’s also bad at reading them. A 2025 study by technologist Alek Safar suggests that humans are 89.1 percent accurate at telling the time on analogue clocks while the top-rated AI is only 39.4 percent accurate.

That study only hypothesizes about the reasons this might be, but the potential explanations are all interesting. The first is that there simply aren’t enough pictures of clocks in the datasets for AI models to accurately learn to tell time. Another is that images of clocks are difficult to describe accurately in language, which is something large language models require in order to process them.

Another 2025 study conducted by the School of Informatics at the University of Edinburgh also found that all major large language models have trouble understanding the time when shown an image of an analogue clock.

“Our findings suggest that successful temporal reasoning requires a combination of precise visual perception, numerical computation, and structured logical inference that current MLLMs have not yet mastered,” says the study.

As I said, neither of these studies claims to completely know why AI isn’t great at these tasks. There are some interesting factors to consider, though, including the datasets that AI systems use to understand the world.

Something you need to understand is that large language models, the technology referred to as “AI” in contemporary parlance, don’t really do math. This is counterintuitive, because we’re used to thinking about computers as mathematical machines, but modern AI technology is based more on pattern recognition. Clocks are an interesting example of this at work. The systems, instead of calculating the angles or positioning of the hands to tell the time, are attempting to guess the time based on pattern recognition. Which, come to think of it, isn’t that different from how I personally tell time when looking at a clock—AI systems are just bad at this. And there are some interesting reasons why.
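For comparison, here is roughly what "calculating the angles" would look like if a program did the math directly. This is a minimal sketch in Python, not anything taken from the studies or from AI World Clocks; the function names `hand_angles` and `time_from_angles` are made up for illustration, and angles are assumed to be measured in degrees clockwise from the 12 o'clock position.

```python
def hand_angles(hour, minute, second=0):
    """Return (hour, minute, second) hand angles in degrees clockwise from 12."""
    # The second and minute hands sweep 360 degrees in 60 steps: 6 degrees each.
    second_angle = second * 6.0
    minute_angle = (minute + second / 60) * 6.0
    # The hour hand sweeps 360 degrees in 12 hours: 30 degrees per hour,
    # and it keeps creeping forward as the minutes pass.
    hour_angle = ((hour % 12) + minute / 60 + second / 3600) * 30.0
    return hour_angle, minute_angle, second_angle


def time_from_angles(hour_angle, minute_angle):
    """Read (hour, minute) back from hand angles, the inverse calculation."""
    hour = int(hour_angle // 30) or 12  # an angle under 30 degrees means 12 o'clock
    minute = round(minute_angle / 6) % 60
    return hour, minute


if __name__ == "__main__":
    h, m, s = hand_angles(10, 10)
    print(h, m, s)
    print(time_from_angles(h, m))
```

At 10:10, the minute hand sits at 60 degrees and the hour hand at roughly 305 degrees, a little past the 10 because ten minutes of the hour have already gone by. A dozen lines of arithmetic get this right every time, which is exactly the kind of step a pattern-matching system skips.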

The 10:10 problem

Go to your image search tool of choice and type “watch,” then keep track of what time you see on the watch faces. You’ll notice quickly that a majority of the analogue watches are set to ten after ten (10:10).

Why that particular time? Because marketing. Watch and clock sellers have long known that setting a watch to 10:10 makes it more attractive to would-be buyers. A 2017 study published in Frontiers in Psychology suggests this might be because the angle of the two hands resembles a human smile. Another consideration is that, at 10:10, the hands don’t cover the logo, brand name, or any complications like the date. It makes for an attractive photo, basically, and has become standard for watch and clock marketing.

One consequence of this: many of the images of watches and clocks on the internet are set to 10:10. This in turn means a major chunk of the clocks in AI datasets are set to that same time. Ask any AI system to draw you a clock and, most of the time, it will set it to 10:10, sometimes even if you ask for a different time. Which is part of how Moore ended up making his website of hilariously bad AI clocks.

“I asked an image generator to give me an image of a clock at a particular time, and it definitely could not do that,” he told me. “I’d get a lot of 10:10s, even though I gave it a lot of specific prompting.” Moore isn’t alone here—at least one Reddit user noticed this while trying to generate clocks set to a specific time.

This is just one small rabbit hole about clocks and watches, granted, but it points to something about the data AI systems have access to that can affect their abilities. Another theory that comes up in discussions about this: drawing clocks is a common test for dementia, which in turn means there are some very inaccurate drawings of clocks on the internet.

The people who make AI systems don’t fully understand how they work, so a lot of this is just guessing. And that’s what makes the AI clock website so fun: it’s a glimpse at how these systems work.

The post Why does AI suck at making clocks? appeared first on Popular Science.

Why does AI suck at making clocks?

by Cathy Klein